Alexa Champions is “a recognition program designed to honor the most engaged developers and contributors in the community”. In a previous post, we revealed that some very well-rated skills were hidden several hundred pages deep in the Alexa marketplace. Eric Olson, co-founder of 3PO-Labs and an Alexa Champion, pointed out that all 3 of these skills were built by Alexa Champions. It seems odd for skills from Champion developers to be rendered essentially undiscoverable by the very company that features them. This raises the question of whether Alexa Champions have any objective advantage over regular developers. We have already looked at skill ratings, skill rankings on the marketplace, and the number of ratings per skill. Today, we will look at the number of reviews for Alexa Champion skills.

Analysis

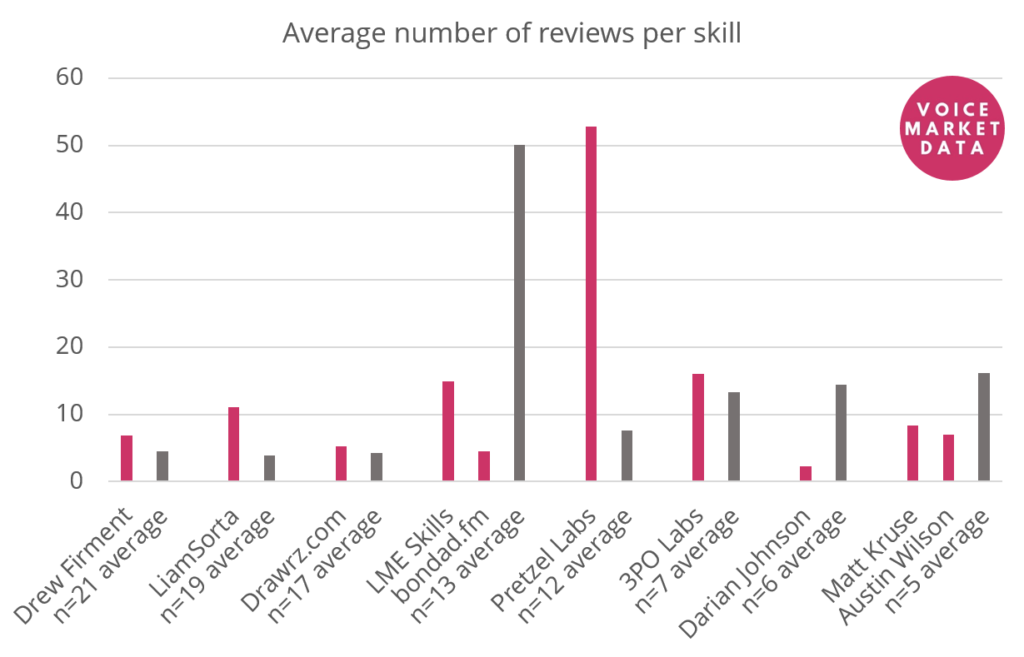

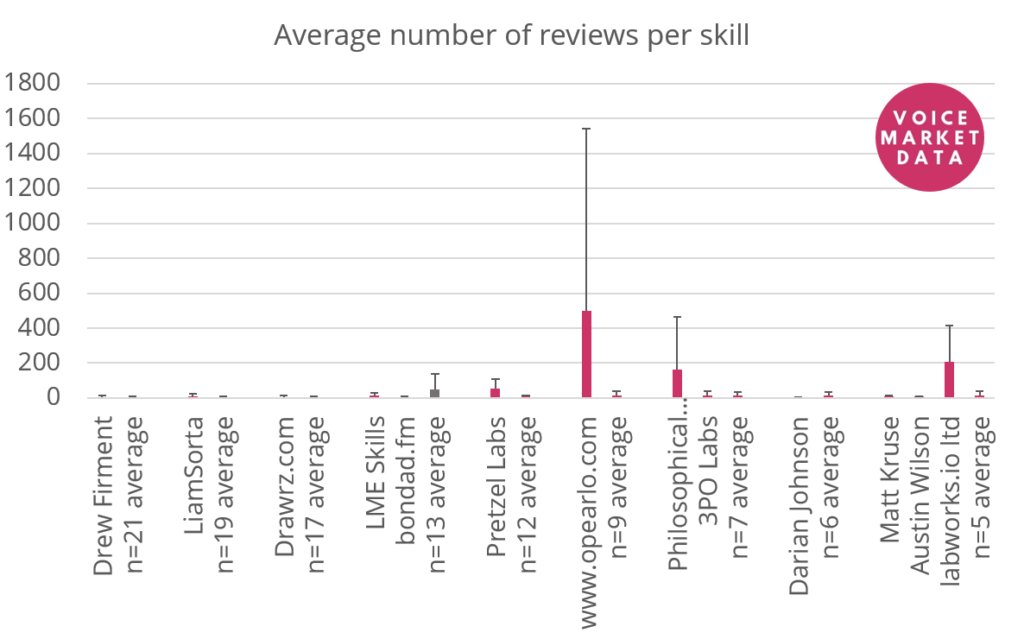

Below are the results for 13 Alexa Champions. Our analysis did not include Invoked Apps, because their wildly successful skills make them an extreme outlier. We excluded VoiceXP because their 36 skills make it hard to match them with an industry average (there is only one other developer with 36 skills). We also excluded 9 developers who had published only 1 or 2 skills. Mark Tucker’s skills are spread across several developer accounts, so, unfortunately, his were also excluded from the analysis.

In the graphs, you will see the name of each Alexa Champion developer next to “n=# average”. This is the average for all developers who published “#” skills, the same number of skills the Alexa Champion published. The black lines show the variability around the average: a short line means most data points were close to the average, while a long line means the data points were far from it (either above or below).
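To make the “n=# average” comparison concrete, here is a minimal Python sketch of how such a matched average could be computed. It is not the method behind the graphs; it assumes a hypothetical mapping from developer name to per-skill review counts (the names and numbers are made up), that each bar pools the per-skill counts, and that the black lines are population standard deviations. The post does not say which measure of variability the graphs actually use.

```python
from statistics import mean, pstdev

# Hypothetical data: developer name -> review count for each of their skills.
# Names and numbers are invented for illustration only.
REVIEWS = {
    "ChampionA": [12, 3, 0],
    "DevB": [5, 1, 2],
    "DevC": [0, 0, 7],
    "DevD": [20, 4, 1],
    "DevE": [2, 2],
}


def summarize(counts):
    """Return (mean, population standard deviation) of a list of review counts.

    The standard deviation stands in for the 'black lines' (an assumption).
    """
    return mean(counts), pstdev(counts)


def matched_average(reviews, n_skills, exclude=()):
    """Pool the per-skill review counts of every developer who published
    exactly n_skills skills (skipping any names in `exclude`) and summarize
    them. Raises statistics.StatisticsError if no developer matches."""
    pooled = [
        count
        for name, counts in reviews.items()
        if len(counts) == n_skills and name not in exclude
        for count in counts
    ]
    return summarize(pooled)


champion = "ChampionA"
n = len(REVIEWS[champion])
print(champion, summarize(REVIEWS[champion]))
print(f"n={n} average", matched_average(REVIEWS, n))
```

Passing `exclude={champion}` to `matched_average` would compare a Champion against the other developers only; whether the post's averages include the Champion's own skills is not stated.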

Number of reviews

The number of reviews is arguably the most meaningful engagement metric we can get from the Alexa marketplace. Leaving a review means the skill was engaging (or frustrating) enough for someone to spend time sharing their opinion. Our initial graph looks similar to the one from our analysis of the number of ratings. Some developers show significant variability because of at least one very popular skill, which makes the rest of the graph hard to read. Congrats to Opearlo, Philosophical Creations, and labworks.io for doing a great job, but we need to remove your data. If you look closely, you’ll notice that the y-axis (the vertical scale) goes up to 1,800, whereas it went up to 12,000 for the number of ratings. Skills receive far fewer reviews than ratings because writing a review takes much more effort.

Once we remove Opearlo, Philosophical Creations, and labworks.io, the 10 remaining Alexa Champions and their matched averages become easier to see. We wanted to know whether Alexa Champions consistently receive more reviews than comparable developers, which could suggest that their skills have a more engaged user base. Looking at these averages, there doesn’t seem to be a clear trend: an Alexa Champion’s average review count can be larger than, smaller than, or about the same as the average for non-Champions with the same number of skills. Only a small minority of Alexa Champions have at least one very popular skill. Because the number of reviews is much harder to inflate artificially (though not impossible), would Amazon benefit from giving the title of Alexa Champion to developers who have already built an engaged user base comparable to, or even larger than, that of current Champions? What does it even mean to be an Alexa Champion?!